Switch collection takes too long to complete within 5 minutes?

Hello,

For the past few days I seem to have had connection problems with some Brocade switches, as the logs are full of:

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_09:50 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_10:10 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_10:15 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_10:20 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_10:25 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_10:35 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_10:50 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_10:55 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_11:00 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_11:05 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_11:10 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_11:30 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_11:35 : too many switch02 processes: sanperf.pl, exiting

/opt/appstor/stor2rrd_BO/bin/san_stor_load.sh: 2018-09-17_11:40 : too many switch02 processes: sanperf.pl, exiting

However, the config_check runs fine ...

Is there a way to get a more detailed log showing what is so slow that the collection takes more than 5 minutes?

Is there a way to see how far the collection has gone?
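For what it's worth, a rough way to see how often collection is being skipped is to summarise the "too many ... processes" messages quoted above. This is only a minimal sketch: `count_skipped_runs` is a hypothetical helper, not part of stor2rrd, and the log file path is passed as an argument because it depends on the installation. It assumes the message format is exactly the one shown above and that a POSIX `awk` is available.

```shell
# Hypothetical helper (not part of stor2rrd): summarise skipped runs per switch.
# Usage: count_skipped_runs /path/to/logfile
# Output, one line per switch: <switch> <count> <first timestamp> <last timestamp>
count_skipped_runs() {
    awk '
        / too many .* processes: / {
            # The switch name is the word following "many";
            # the timestamp is the second field of the line.
            for (i = 1; i < NF; i++) {
                if ($i == "many") {
                    sw = $(i + 1)
                    skipped[sw]++
                    if (!(sw in first)) first[sw] = $2
                    last[sw] = $2
                    break
                }
            }
        }
        END {
            for (sw in skipped) print sw, skipped[sw], first[sw], last[sw]
        }
    ' "$1" | sort
}
```

It only counts the runs that were skipped; it does not show what the still-running collection is waiting for.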

Thanks

Comments

-

Hi, do you see data in the GUI for them?

-

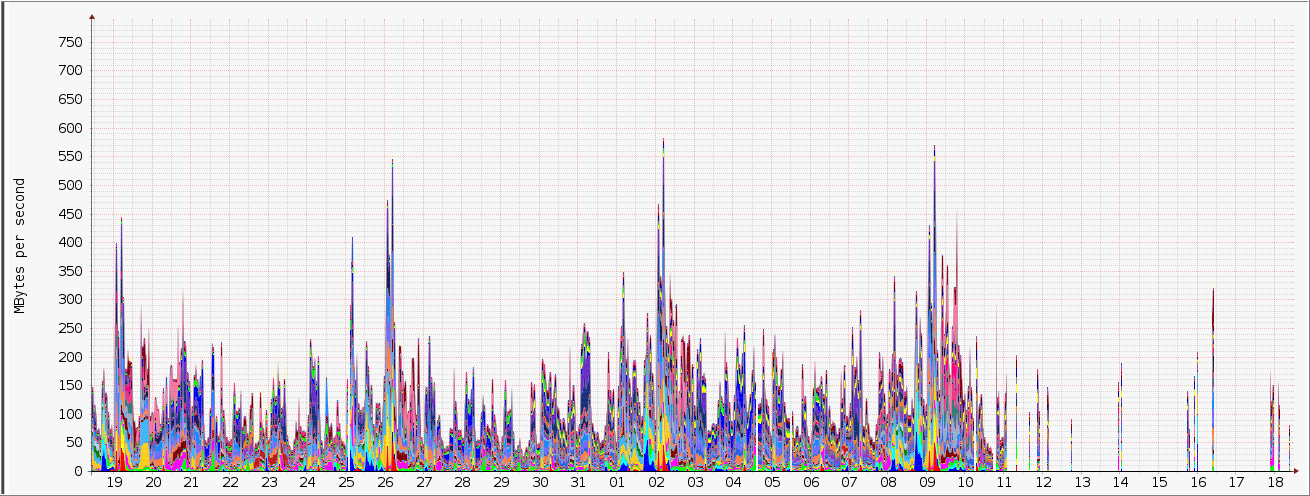

Only a few points:

I'm pretty sure it's a network issue, but maybe stor2rrd could be more verbose when there are timeouts in the snmpwalk?
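Until then, one way to check by hand whether a walk against a switch finishes within the 5-minute window is to wrap it in a hard time limit and print the elapsed time. This is a sketch under assumptions: `timed_run` is a hypothetical helper, it relies on the GNU coreutils `timeout` command (which exits with 124 when the limit is hit) and on `date +%s`, and the host, community string and OID in the example are placeholders. It does not reproduce what sanperf.pl actually queries.

```shell
# Hypothetical helper: run a command with a hard time limit and report
# how long it took and whether it finished.
# Usage: timed_run <limit_seconds> <command> [args...]
timed_run() {
    limit=$1
    shift
    start=$(date +%s)
    rc=0
    # GNU timeout kills the command after $limit seconds and returns 124.
    timeout "$limit" "$@" >/dev/null 2>&1 || rc=$?
    end=$(date +%s)
    if [ "$rc" -eq 124 ]; then
        echo "TIMEOUT after $((end - start))s: $*"
    else
        echo "exit=$rc in $((end - start))s: $*"
    fi
    return "$rc"
}

# Example (placeholders: community "public", host "switch02", system subtree OID):
# timed_run 300 snmpwalk -v 2c -c public -t 5 -r 1 switch02 1.3.6.1.2.1.1
```

Running it from the stor2rrd host against one switch in the affected location and one in a working location should at least show whether the delay is on the network path.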

-

Is that a general issue for all switches?

-

Yes, for all switches within the same location; the others work fine.

-

Did that happen around the 11th/12th of September on all switches? Has anything been changed on the stor2rrd side? Send us the logs so we can check whether there is any lack of system resources, etc.

Enter a short problem description in the text field of the upload form.

cd /home/stor2rrd/stor2rrd # or wherever your STOR2RRD working dir is

tar cvhf logs.tar logs etc tmp/*txt

gzip -9 logs.tar

Send us logs.tar.gz via https://upload.stor2rrd.com

-

Hi Pavel, mbayard

I'm experiencing a very similar issue (the same, I would say). Did you come to any conclusion?

-

Hi, do you have the latest version, 2.50? If you are already on that version, then send us the logs, please.

Enter a short problem description in the text field of the upload form.

cd /home/stor2rrd/stor2rrd # or wherever your STOR2RRD working dir is

tar cvhf logs.tar logs etc tmp/*txt

gzip -9 logs.tar

Send us logs.tar.gz via https://upload.stor2rrd.com

-

Hi, there is an enhancement in 2.50 which should resolve that.

-

Hi, this will resolve it:

cd /home/lpar2rrd/lpar2rrd

vi etc/lpar2rrd.cfg

and replace your current PERL5LIB with this:

PERL5LIB=/home/lpar2rrd/lpar2rrd/bin:/home/lpar2rrd/lpar2rrd/vmware-lib:/usr/share/perl5/vendor_perl:/usr/lib64/perl5/vendor_perl:/usr/share/perl5:/home/lpar2rrd/lpar2rrd/lib

Go to the GUI and confirm that VMware connection test is working.

-

Sorry, wrong thread, ignore the above.

-

Hi, as Pavel said, always run the latest version because it's always better.

In my case this was due to a network issue between the stor2rrd instance and the datacenter in which the switches were located. The network team found the problem and everything came back to normal after that.

Good luck!

-

Sure, however 2.50 comes with an optimization in the SAN backend which solves a similar problem (it might happen even with good connectivity). OK, your problem was at the infrastructure level.
