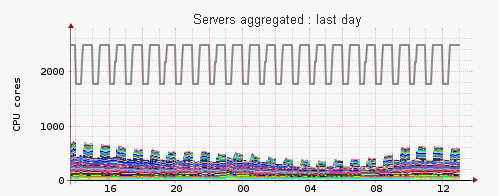

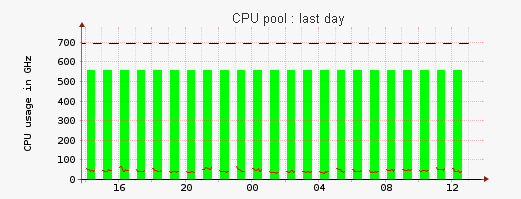

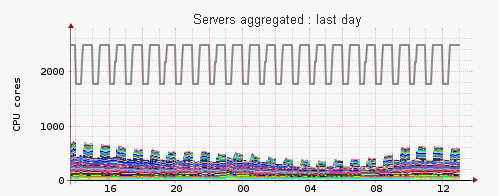

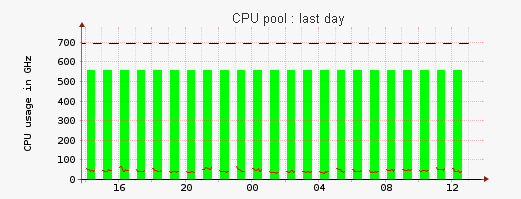

VMware graphs are dropping often

My cluster CPU and memory graphs are dropping often. Any reason for this?

Comments

-

Send us logs:

Note a short problem description in the text field of the upload form.

cd /home/lpar2rrd/lpar2rrd # or wherever your LPAR2RRD working dir is

tar cvhf logs.tar logs etc tmp/*txt

gzip -9 logs.tar

Send us logs.tar.gz via https://upload.lpar2rrd.com
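The upload steps above can be wrapped in a small function so they always run from the right directory. This is a minimal sketch, not an LPAR2RRD tool: `collect_logs` is a hypothetical helper, the default path is the one from the post, and `gzip -f` (an addition) overwrites a logs.tar.gz left over from an earlier attempt.

```shell
#!/bin/sh
# Hypothetical helper: package LPAR2RRD logs for upload, using the commands from the post.
# The body runs in a subshell (parentheses), so the cd does not affect the caller.
collect_logs() (
  workdir=${1:-/home/lpar2rrd/lpar2rrd}   # or wherever your LPAR2RRD working dir is
  cd "$workdir" || exit 1
  # c = create, v = list files, h = follow symlinks, f = write to logs.tar
  tar cvhf logs.tar logs etc tmp/*txt || exit 1
  gzip -9 -f logs.tar                     # -f replaces an existing logs.tar.gz
  echo "Now upload $workdir/logs.tar.gz via https://upload.lpar2rrd.com"
)
```

For a non-default installation, pass the working directory as the first argument.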

-

Did you find the reason? We have the same issue.

-

Hi, we have had no feedback. Basically, the problem is that the data load takes longer than an hour; it was quite a huge environment. To speed up processing, you can increase the parallelization of ESXi data collection. Normally 9 processes run to get ESXi data from each vCenter. Increase it to 20:

cd /home/lpar2rrd/lpar2rrd

vi etc/.magic

Add these two lines:

VMWARE_PARALLEL_RUN=20

export VMWARE_PARALLEL_RUN

Let it run. Does it help?
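The same edit can be scripted so that repeated changes (20 here, and the larger values suggested later in the thread) do not pile up duplicate lines in etc/.magic. A minimal sketch, assuming etc/.magic is a plain shell fragment of `VAR=value` / `export VAR` lines as shown in this thread; `set_magic` is a hypothetical helper, and it writes the one-line form `export VAR=value`, which the shell treats the same as the two-line form above.

```shell
#!/bin/sh
# Hypothetical helper: set a variable in etc/.magic, replacing any earlier setting of it.
# Run it from the LPAR2RRD working dir, e.g. set_magic VMWARE_PARALLEL_RUN 20
set_magic() {
  var=$1
  value=$2
  file=${3:-etc/.magic}
  tmp="$file.tmp.$$"
  if [ -f "$file" ]; then
    # drop old "VAR=...", "export VAR" and "export VAR=..." lines for this variable
    grep -v -E "^(export[[:space:]]+)?$var(=|[[:space:]]*\$)" "$file" > "$tmp" || true
  else
    : > "$tmp"
  fi
  printf 'export %s=%s\n' "$var" "$value" >> "$tmp"
  mv "$tmp" "$file" && chmod 644 "$file"
}
```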

-

Hi Pavel,

(Version 5.07)

I just found this old thread. I think your comment is not correct! I checked the load_vmware.sh script and found that it looks for "VCENTER_PARALLEL_RUN", not "VMWARE_PARALLEL_RUN". So the correct syntax in etc/.magic should be:

VCENTER_PARALLEL_RUN=20

export VCENTER_PARALLEL_RUN

Furthermore, this variable is not really used by the scripts, so there is no point in setting it as things stand!

I've now added the following lines to load_vmware.sh:

VCENTER_PARALLEL_RUN=${VCENTER_PARALLEL_RUN:-1}   # treat an unset value as a serial run

if [ "$VCENTER_PARALLEL_RUN" -gt 1 ]; then

  while [ "$(ps -e | grep -c vmware_run.sh)" -ge "$VCENTER_PARALLEL_RUN" ]; do   # limit reached: wait

    echo "waiting ...."

    sleep 1

  done

fi

if [ "$VCENTER_PARALLEL_RUN" -eq 1 ]; then

  "$BINDIR"/vmware_run.sh 2>>"$ERRLOG" | tee -a "$INPUTDIR"/logs/load_vmware.log

else

  "$BINDIR"/vmware_run.sh 2>>"$ERRLOG" | tee -a "$INPUTDIR"/logs/load_vmware.log &   # run in the background

fi

That works so far, but the problem is not the time spent fetching the data from the vCenters; it is the creation of the charts. Creating all the charts is sooo time-consuming and takes more than 1 hour. How can we parallelize this?
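Juergen's patch caps the number of vmware_run.sh copies by polling `ps` once per second. A minimal sketch of another way to cap concurrency in plain POSIX sh is to start the jobs in batches of N and `wait` for each batch. `run_in_batches` and `collect_vcenter` are hypothetical names, and the `sleep` stands in for the real per-vCenter work.

```shell
#!/bin/sh
# Hypothetical stand-in for the real per-vCenter collection.
collect_vcenter() {
  sleep 1
  echo "collected $1"
}

# Start one background job per argument, at most $1 at a time, in batches.
run_in_batches() {
  max=$1
  shift
  running=0
  for vc in "$@"; do
    collect_vcenter "$vc" &
    running=$((running + 1))
    if [ "$running" -ge "$max" ]; then
      wait            # batch is full: wait for all of its jobs
      running=0
    fi
  done
  wait                # wait for the last, possibly partial, batch
}

# e.g. run_in_batches 20 vcenter01 vcenter02 vcenter03
```

The trade-off: each batch lasts as long as its slowest job, whereas the polling loop starts a new job as soon as any one finishes.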

BR Juergen

-

Hi, VMWARE_PARALLEL_RUN is the correct variable:

# grep VMWARE_PARALLEL_RUN bin/*

bin/vmw2rrd.pl:if ( defined $ENV{VMWARE_PARALLEL_RUN} ) {

bin/vmw2rrd.pl: $PARALLELIZATION = $ENV{VMWARE_PARALLEL_RUN};

Charts are not created in advance. What is your product version? Try to increase the parallel run to 20 and let us know.
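Which name an installation actually reads depends on its version: Pavel's grep finds VMWARE_PARALLEL_RUN in bin/vmw2rrd.pl, while Juergen reports VCENTER_PARALLEL_RUN in load_vmware.sh on 5.07. A minimal sketch that runs the same grep for both names; `which_parallel_var` is a hypothetical helper, `grep -r` is a GNU/BSD extension, and a match only shows that a file mentions the name, not how it is used.

```shell
#!/bin/sh
# Hypothetical helper: show which parallel-run variable names the scripts mention.
# Arguments are files or directories to search (default: bin).
which_parallel_var() {
  [ "$#" -gt 0 ] || set -- bin
  for var in VMWARE_PARALLEL_RUN VCENTER_PARALLEL_RUN; do
    # -r searches directories recursively, -l prints only matching file names
    files=$(grep -rl "$var" "$@" 2>/dev/null)
    if [ -n "$files" ]; then
      echo "$var:" $files     # unquoted on purpose: join the file names on one line
    fi
  done
}

# e.g. which_parallel_var bin    (run from the LPAR2RRD working dir)
```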

-

Hi Pavel, as I mentioned in the first line, we are currently on version 5.07.

In this version there is no VMWARE_PARALLEL_RUN:

$ grep VMWARE_PARALLEL_RUN bin/vmw2rrd.pl

OK, now I've undone my changes and set $PARALLELIZATION = 20.

$ grep PARALLEL bin/vmw2rrd.pl

my $PARALLELIZATION = 10;

# $PARALLELIZATION = 1;

if ( !$do_fork || $cycle_count == $PARALLELIZATION ) {

I'll send you feedback when a few runs have finished.

BR Juergen

-

Hi, also definitely upgrade to 6.02; there were a lot of improvements and optimisations on the back end.

-

Hi,

After one night, it is still not working. All VMware charts have gaps; all Power charts are OK.

The problem started with our migration from AIX to Linux.

I have to discuss it first, but I think we will upgrade to the latest version.

BR Juergen

-

Hi Pavel,

We have now upgraded to 6.02, and the problem is almost solved. We still have a one-hour gap at midnight, but only on the VMware graphs, not on the Power graphs.

The load.sh run takes approx. 45 minutes and daily_lpar_check approx. 30 minutes, together more than one hour. So the next start of load.sh is blocked by "There is already running another copy of load.sh, exiting ...".

Just to let you know, this is the size of our environment:

60 HMCs

174 ManagedSystems (Ps)

2005 LPARs

29 VCenter

449 VMware Hosts

13835 VMware VMs

Best regards,

Juergen
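The "There is already running another copy of load.sh, exiting ..." message quoted above comes from a guard that keeps two hourly runs from overlapping. How load.sh implements it is not shown in the thread; the following is only a minimal sketch of one common technique, an atomic `mkdir` lock. `run_once` and the lock path are hypothetical, and a production guard would also need to detect a stale lock left behind by a crash, for example by storing and checking a PID.

```shell
#!/bin/sh
# Hypothetical single-instance guard: run "$@" only if no other copy holds the lock.
# The body runs in a subshell so its traps do not leak into the caller.
run_once() (
  lockdir=$1
  shift
  # mkdir is atomic: if two runs race, only one of them can create the directory
  if ! mkdir "$lockdir" 2>/dev/null; then
    echo "another copy is already running, exiting ..." >&2
    exit 1
  fi
  trap 'rmdir "$lockdir"' EXIT   # release the lock however this run ends
  trap 'exit 1' INT TERM         # route signals through the EXIT trap too
  "$@"
  exit $?                        # explicit exit: runs the EXIT trap, keeps the status
)

# e.g. run_once /tmp/load.lock ./load.sh
```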

-

Hi, wow, that is a pretty big environment! Use this as a hotfix; it will resolve the issue:

-rwxrwxr-x 1 lpar2rrd lpar2rrd 22664 May 27 12:32 load.sh

Gunzip it and copy it to /home/lpar2rrd/lpar2rrd/ (permissions 755, owner lpar2rrd). If your web browser gunzips it automatically, just rename it:

mv load.sh.gz load.sh

Make sure the file size is the same as in the listing above. If you are on Linux, change the interpreter on the first line of the script to /bin/bash.
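The manual hotfix steps can also be scripted. This is a minimal sketch under the post's assumptions (default working directory, expected size of 22664 bytes); `install_hotfix` is a hypothetical helper. It checks the size before touching the first line, because replacing the interpreter changes the size, and it leaves the ownership change to you because `chown` normally needs root.

```shell
#!/bin/sh
# Hypothetical helper for the hotfix steps: unpack, verify size, fix interpreter, install.
install_hotfix() {
  src=$1                                 # load.sh.gz, or load.sh if the browser unpacked it
  workdir=${2:-/home/lpar2rrd/lpar2rrd}
  expected=${3:-22664}                   # size from the ls listing in the post
  tmp="$workdir/load.sh.new.$$"

  # Gunzip only if the file really is gzip data
  if gzip -t "$src" 2>/dev/null; then
    gunzip -c "$src" > "$tmp" || return 1
  else
    cp "$src" "$tmp" || return 1
  fi

  # Compare the size first: replacing the first line below changes it
  size=$(wc -c < "$tmp" | tr -d ' ')
  if [ "$size" -ne "$expected" ]; then
    echo "size is $size bytes, expected $expected" >&2
    rm -f "$tmp"
    return 1
  fi

  # On Linux, point the interpreter line (#!...) at /bin/bash
  if [ "$(uname -s)" = Linux ] && head -n 1 "$tmp" | grep -q '^#!'; then
    { echo '#!/bin/bash'; tail -n +2 "$tmp"; } > "$tmp.2" && mv "$tmp.2" "$tmp"
  fi

  chmod 755 "$tmp" && mv "$tmp" "$workdir/load.sh"
  # then, as root if needed: chown lpar2rrd "$workdir/load.sh"
}
```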

-

Hi Pavel,

it works!

Sending daily_lpar_check.pl into the background with nohup is an easy solution!
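Juergen's fix moves daily_lpar_check.pl into the background with nohup, so load.sh itself finishes within the hour. A minimal sketch of that pattern; `start_detached` and the paths in the example comment are hypothetical, and Pavel's actual hotfix is not reproduced in the thread.

```shell
#!/bin/sh
# Hypothetical helper: start a long-running task detached from the caller.
# nohup ignores SIGHUP; stdin comes from /dev/null and all output goes to the log,
# so the caller (for example an hourly job) can return immediately.
start_detached() {
  logfile=$1
  shift
  nohup "$@" >>"$logfile" 2>&1 </dev/null &
  echo "$!"                      # PID of the detached task, for later checks
}

# e.g. start_detached logs/daily_lpar_check.log perl bin/daily_lpar_check.pl   (paths are assumptions)
```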

Thank you!

BR Juergen

-

Hello, we have the same issue here (version 6.02). VMware data are dropping (every second hour is missing) because load.sh does not finish within an hour. Most likely the cause is the higher number of VMs (5000+) in one of the vCenters. Is there a workaround, or are 5000+ VMs per vCenter simply too much? Our current setting is:

VMWARE_PARALLEL_RUN=20

Thank you.

Marek
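The gaps appear whenever one run takes longer than the hourly interval; in Juergen's case about 45 + 30 = 75 minutes. A minimal sketch for measuring a run against that budget; `timed_run` and the 3600-second limit are illustrative, not LPAR2RRD options, and it assumes a `date` that supports `+%s` (GNU, BSD and BusyBox do).

```shell
#!/bin/sh
# Hypothetical helper: run a command, print its run time, warn if it exceeded a limit.
timed_run() {
  limit=$1                           # budget in seconds, e.g. 3600 for an hourly job
  shift
  start=$(date +%s)
  "$@"
  status=$?
  elapsed=$(( $(date +%s) - start ))
  echo "elapsed ${elapsed}s"
  if [ "$elapsed" -gt "$limit" ]; then
    echo "WARNING: run took ${elapsed}s, more than the ${limit}s interval" >&2
  fi
  return "$status"
}

# e.g. timed_run 3600 ./load.sh
```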

-

Hi, well, 5k+ VMs is a lot, but we have users with 12k+ where it works. We are still making new enhancements in this area to keep load.sh running under 1 hour. The best option would be to upgrade to the latest build to see if that helps. You can also increase VMWARE_PARALLEL_RUN in etc/.magic:

export VMWARE_PARALLEL_RUN=40

Also make sure you have enough CPU resources on the lpar2rrd server. If nothing helps, send us logs and we will check what more we can do. Note a short problem description in the text field of the upload form.

cd /home/lpar2rrd/lpar2rrd # or wherever your LPAR2RRD working dir is

tar cvhf logs.tar logs tmp/*txt tmp/*json

gzip -9 logs.tar

Send us logs.tar.gz via https://upload.lpar2rrd.com

-

Hello Pavel, sorry for my delayed response, and thank you for your suggestions. I finally had a chance to work on the problem again and upgraded LPAR2RRD (6.16), but with no improvement. Could it be that we simply don't have enough resources? We have almost 3k LPARs (70 HMCs) and over 20k VMs (30 vCenters), and our LPAR2RRD server runs on RHEL Linux with 32 CPUs and 48 GB RAM. Thank you very much.

-

Hi Marek, can you also upgrade to this version? We have just finished further enhancements, especially for such big environments. Then go to /home/lpar2rrd/lpar2rrd (the lpar2rrd working dir) and set this in etc/.magic:

export VMWARE_PARALLEL_RUN=250

chmod 644 etc/.magic

If the problem persists, send us logs, please. Note a short problem description in the text field of the upload form.

cd /home/lpar2rrd/lpar2rrd # or wherever your LPAR2RRD working dir is

tar cvhf logs.tar logs tmp/*txt tmp/*json

gzip -9 logs.tar

Send us logs.tar.gz via https://upload.lpar2rrd.com

-

Hi, OK, I will give it a try. First I will crank up the _PARALLEL_RUN value; 250 is quite a big difference, so that might help. But what about our CPU/RAM setup: is it OK for such an environment? As for the version, we have 6.16, which I downloaded a week ago (26.5.) from your download section. If the problem persists, we can check the logs.

Thank you.

-

It is OK, try it. Your HW setup is fine for that.

-

Hi, perhaps a silly question, but is it OK to remove the rule from load.sh/load_vmware.sh that checks whether the previous instance is still running, so the next run starts no matter what? We've tried that and so far it is running fine with no gaps. Can we expect any side effects? Thanks.
