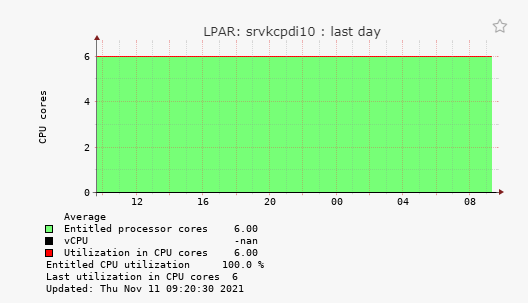

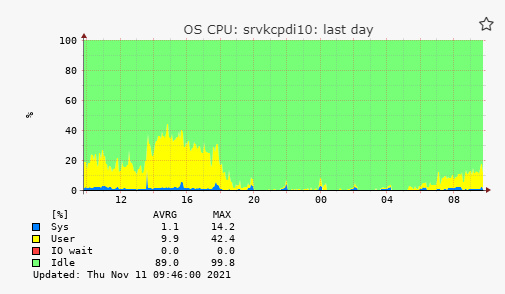

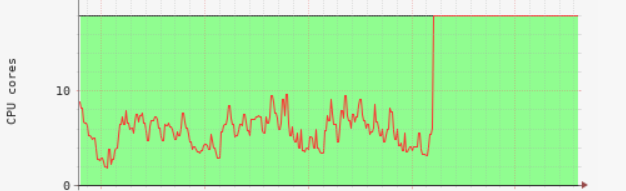

CPU (by HMC) and CPU OS graphs differ badly

Comments

-

Hi, what is your LPAR2RRD version? Do you use the HMC CLI (ssh) or the HMC REST API?

-

7.30

REST API

-

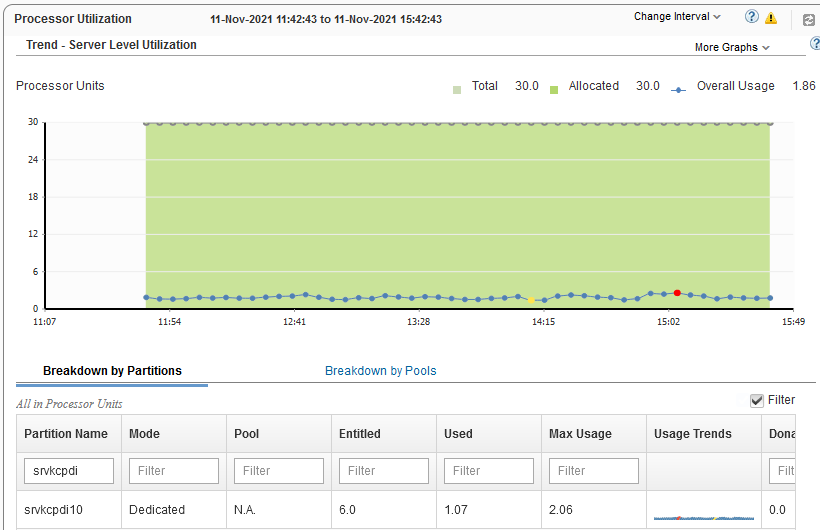

What are the Processor Sharing properties? Something like this?

Allow when partition is inactive. Allow when partition is active.

Is the HMC version 8 or 9?

-

Processor Sharing is set to "Allow when partition is inactive".

HMC v9

-

When you check the graphs on the HMC directly, do you see normal utilisation or a line like in LPAR2RRD?

-

The HMC displays a correct graph.

-

Hello: Maybe it's something similar that happened to me, see:

-

Hi, this is probably related to the issue you mentioned. Can you verify the idle cycles and utilized cycles?

lslparutil -r lpar -m SERVER_NAME --filter "event_types=sample,lpar_names=LPAR_NAME"

-

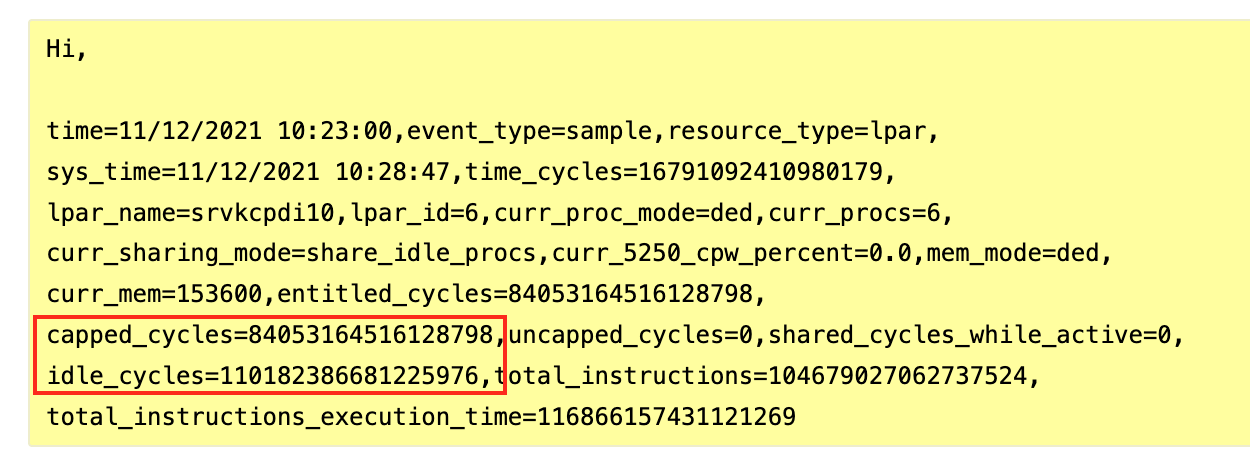

Hi,

time=11/12/2021 10:23:00,event_type=sample,resource_type=lpar,sys_time=11/12/2021 10:28:47,time_cycles=16791092410980179,lpar_name=srvkcpdi10,lpar_id=6,curr_proc_mode=ded,curr_procs=6,curr_sharing_mode=share_idle_procs,curr_5250_cpw_percent=0.0,mem_mode=ded,curr_mem=153600,entitled_cycles=84053164516128798,capped_cycles=84053164516128798,uncapped_cycles=0,shared_cycles_while_active=0,idle_cycles=110182386681225976,total_instructions=104679027062737524,total_instructions_execution_time=116866157431121269
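For anyone who wants to check their own counters, here is a minimal Python sketch that splits a record like the one above into fields and compares idle_cycles with capped_cycles. Later replies in this thread point out that idle_cycles should not be higher than capped_cycles. The function names are hypothetical (they are not part of LPAR2RRD or the HMC), and the parser assumes a plain comma-separated key=value record with no commas inside values.

```python
def parse_lslparutil_record(record: str) -> dict:
    """Split one comma-separated key=value record into a dict of strings.

    Assumption: no value contains a comma (true for the sample below).
    """
    fields = {}
    for item in record.split(","):
        key, sep, value = item.strip().partition("=")
        if sep:  # skip anything that is not a key=value pair
            fields[key] = value
    return fields


def idle_exceeds_capped(fields: dict) -> bool:
    """Return True when idle_cycles is greater than capped_cycles."""
    return int(fields["idle_cycles"]) > int(fields["capped_cycles"])


# Sample record copied from this thread (shortened to the relevant fields).
sample = (
    "time=11/12/2021 10:23:00,event_type=sample,resource_type=lpar,"
    "lpar_name=srvkcpdi10,lpar_id=6,curr_proc_mode=ded,curr_procs=6,"
    "entitled_cycles=84053164516128798,capped_cycles=84053164516128798,"
    "uncapped_cycles=0,idle_cycles=110182386681225976"
)

fields = parse_lslparutil_record(sample)
if idle_exceeds_capped(fields):
    print(f"{fields['lpar_name']}: idle_cycles is higher than capped_cycles")
else:
    print(f"{fields['lpar_name']}: counters look consistent")
```

For the record posted here the check reports that idle_cycles is higher than capped_cycles, which matches the box highlighted in the screenshot.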

-

Another user solved the problem with IBM, who admitted there is a flaw when using LPM on some firmware versions.

These are the possible solutions:

IBM Support notes that there is a recently discovered flaw related to partition LPM and counters with dedicated processors. A fix is already being worked on, but there is no certainty about when it will be released. At the moment, the alternatives to correct the counters are:

1.- The least impact, if you have LPM capability: migrate the LPAR while it is not active, then activate it on the destination server. Then (if you prefer) you can return it to the original server.

2.- The most drastic ways would be to turn the server off and on, or to delete and recreate the partitions.

I personally managed to solve it with option 1.

Can you try the option 1 solution?

-

I'm going to, but I haven't yet.

I wonder if LPAR2RRD could work around that bug like the HMC does? The flaw doesn't cause the HMC to plot corrupted graphs.

The proposed solution effectively turns the LPM (Live Partition Mobility) feature into Dead Partition Mobility. That is not a great trade-off.

-

Hello:

The problem is with the counters of the affected LPAR on the HMC itself. The idle_cycles counter cannot be greater than capped_cycles (I highlighted it with a red box). I solved it with alternative 1, as already indicated:

"1.- The least impact, if you have LPM capability: migrate the LPAR while it is not active, then activate it on the destination server. Then (if you prefer) you can return it to the original server."

-

If you prefer, you can open an IBM support case to confirm the proposed solution or to have them indicate any additional alternatives.

-

Hi mcaroca,

That isn't a solution, that's a workaround. A solution that ruins all the benefits of LPM isn't really a solution, IMHO.

-

Hi, basically it is a bug in the HMC; ask IBM for a solution. Idle cycles are higher than capped cycles, which should not happen. BTW, try switching to HMC REST API access; it might work properly.

-

Pavel,

My point is that this bug doesn't prevent the HMC from drawing correct graphs, so why can't LPAR2RRD?

I have been using the REST API from the very beginning.

-

Hello,

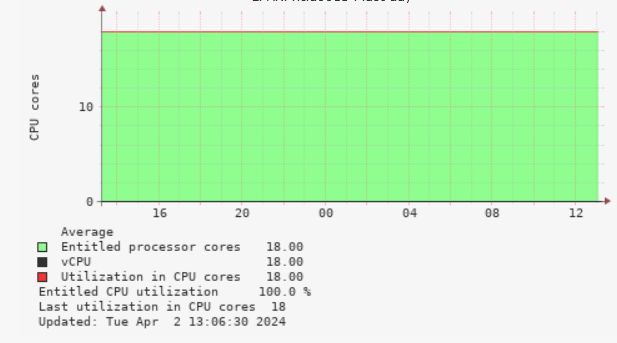

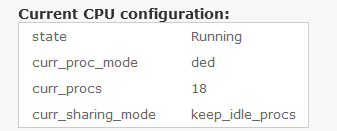

Do you have any further information on this topic, or are the proposed solutions still the only ones? I have the same problem (CPU at 100%, although when checking directly on the LPAR the load is fine). It also has dedicated cores (but the capped_cycles counter is greater than idle_cycles).

It actually happened after an HMC upgrade (current version: HMC V10R3 M1051).

I'm using version 7.30...

Thank you.

-

Upgrade to the latest v7.80-1 and let us know. Do you use the HMC REST API or the CLI (ssh)?

-

Ok, I will try it.

CLI (ssh)

Thanks.

-

CLI does not help

-

Hello,

sorry for the delay - switching to the HMC REST API actually helped!

Thank you very much,
