IBM AIX POWER systems VIO - Modernization of NPIV stack: from Single to Multiple Queue Support

LPAR2RRD team, first off, thanks for all you do; excellent product. Can your team create a dashboard for, or otherwise track, these specific AIX / POWER / VIO metrics? This IBM configuration, once achieved, will be incredible for the customer to see and track. Details are in this link: https://community.ibm.com/community/user/power/blogs/ninad-palsule1/2021/07/26/powervm-npiv-multi-queue-support. Thanks!

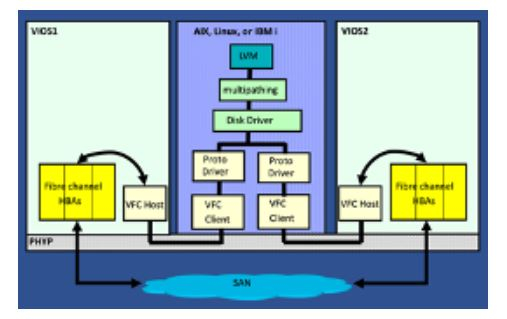

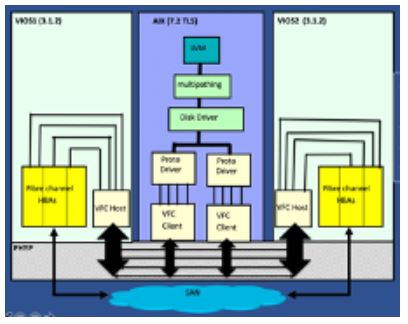

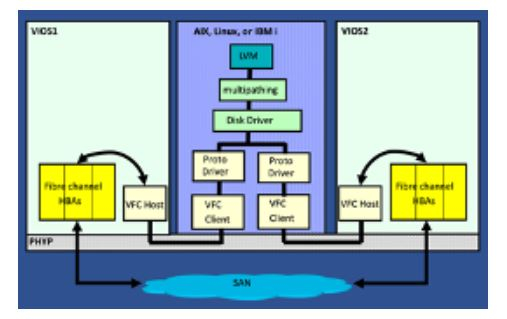

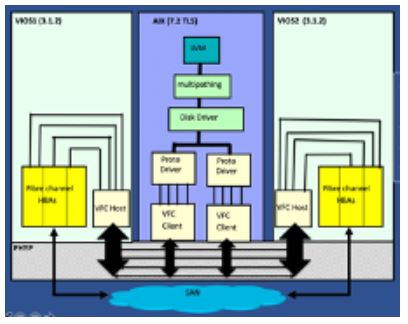

The following figure shows the newly added support for end-to-end multiple queues. The AIX FC driver enables multiple work queues in the AIX protocol and adapter drivers. The client and VIOS partitions use multiple subordinate queues, one per IO queue.

- Immediate gains from new multi-queue stack

- Increase in IOPS: The client partition can now push many more IOs in parallel.

- Fewer virtual adapters needed: Traditionally, customers added multiple virtual adapters to push more IOs in parallel. A single initiator port can now push far more IOs thanks to its multiple queues, so you may not need multiple virtual adapters.

- IO processing in process context: IO processing has moved from interrupt context to process context, which gives better control over how many IOs are processed before the next interrupt is requested. This reduces interrupt overhead and improves CPU utilization. It also reduces the CPU locking that an interrupt handler needed when it had to process multiple IOs. The threads can migrate freely between CPUs, which improves overall system performance.
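The interrupt-overhead point can be illustrated with a toy model. This is only a sketch under stated assumptions, not the AIX or VIOS driver logic: it assumes IOs complete in bursts, that one interrupt wakes a worker thread, and that the worker handles up to `budget` IOs before it requests the next interrupt. The function name `interrupts_needed`, the `budget` parameter, and the burst sizes are all hypothetical.

```python
def interrupts_needed(burst_sizes, budget):
    """Count interrupts in a toy model of budgeted IO processing.

    Assumption (not taken from the driver): each interrupt lets the worker
    process up to `budget` completed IOs before it asks for another interrupt.
    """
    if budget < 1:
        raise ValueError("budget must be at least 1")
    # Ceiling division: a burst of b IOs needs ceil(b / budget) wake-ups.
    return sum((b + budget - 1) // budget for b in burst_sizes)


# Hypothetical bursts of completed IOs.
bursts = [64, 10, 200]

# budget=1 models "one interrupt per IO"; a larger budget models batching.
per_io = interrupts_needed(bursts, budget=1)
batched = interrupts_needed(bursts, budget=32)
print(f"interrupts with budget=1:  {per_io}")
print(f"interrupts with budget=32: {batched}")
```

In this model the interrupt count drops as the budget grows. Real gains depend on the workload and on driver internals that the quoted text does not describe.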


Comments

-

Hi, as per the documentation in the link, performance stats on the VIOS are available only to the root user, which means this is not an option for us (we do not want to run the agent as root, and sudo is not a preferred solution either). We do not know whether that performance data is available through the HMC REST API: we have no documentation and no environment to test it.

-----------------------------

`# cat /proc/sys/adapter/vfc/vfchost0/stats | grep "Commands received"`

Commands received = 0

Commands received = 8692844

Commands received = 10236718

Commands received = 11848144

Commands received = 13025270

Commands received = 11541206

Commands received = 10606106

Commands received = 6627340

Commands received = 8881815
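If such output could be collected (for example, handed over by something that is allowed to read the file), a monitoring script might process it roughly as follows. This is a minimal sketch that only parses text in the format shown above. It assumes each line is one queue's counter and that the counters are cumulative, neither of which is confirmed here. The helper names `parse_commands_received` and `commands_per_second` are hypothetical.

```python
import re

# Matches lines such as "Commands received = 8692844".
COUNTER_RE = re.compile(r"^\s*Commands received\s*=\s*(\d+)\s*$")


def parse_commands_received(text):
    """Return the 'Commands received' counters found in text, in order."""
    counters = []
    for line in text.splitlines():
        match = COUNTER_RE.match(line)
        if match:
            counters.append(int(match.group(1)))
    return counters


def commands_per_second(earlier, later, interval_seconds):
    """Per-counter rate between two samples taken interval_seconds apart.

    Assumes the counters are cumulative (an assumption, not confirmed).
    """
    if len(earlier) != len(later):
        raise ValueError("samples have different numbers of counters")
    if interval_seconds <= 0:
        raise ValueError("interval_seconds must be positive")
    return [(b - a) / interval_seconds for a, b in zip(earlier, later)]


# The grep output quoted in this thread.
SAMPLE = """\
Commands received = 0
Commands received = 8692844
Commands received = 10236718
Commands received = 11848144
Commands received = 13025270
Commands received = 11541206
Commands received = 10606106
Commands received = 6627340
Commands received = 8881815
"""

counters = parse_commands_received(SAMPLE)
print("counters found:", len(counters))
print("total commands received:", sum(counters))
```

Two samples taken a known number of seconds apart could then be passed to `commands_per_second` to get a rate per counter. Reading the proc file itself still requires root.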

NOTE: You need to be root to access the statistics from the proc filesystem.

------------------------------

Any other idea for monitoring apart from the example above?

-

Pavel, thanks for looking into this for all of us. Great response time from you and the team, as always. As for other monitoring ideas: I guess we will just see an increase in SAN traffic, SAN IOPS, etc. once all the checkboxes are met for the IBM AIX POWER Single to Multiple Queue Support.

-

sorry, no idea
