Intermittent drops in charts with LPAR2RRD V6.11 for IBM Power

I just completed an installation of LPAR2RRD V6.11 to monitor our Power systems.

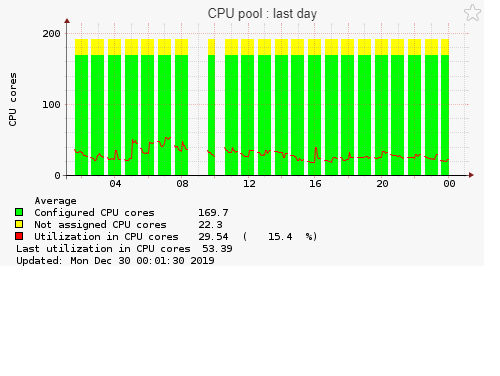

With the exception of a few Power servers, the majority show intermittent gaps in all their charts.

Has anyone experienced something similar in an LPAR2RRD configuration for IBM Power?

A summary of my setup is listed below:

LPAR2RRD version 6.11

LPAR2RRD edition free

OS info AIX (my lab LPAR for this LPAR2RRD installation is running AIX 7.2.2.2)

Perl version This is perl, v5.8.8 built for ppc-thread-multi

Web server info Apache/2.4.18 (Unix) OpenSSL/1.0.2o-fips

RRDTOOL version 1.4.8

RRDp version 1.4008

Comments

-

Hi,

most probably the time or time zone (TZ) on the HMC is wrong. Check that the HMC time and the lslparutil time in the output below are more or less the same, and that both are correct. If they are not, fix it, and do not forget to reboot the HMC after changing the time zone; it is a must.

su - lpar2rrd

cd /home/lpar2rrd/lpar2rrd

./bin/sample_rate.sh

-

Pavel,

As I'm using REST API I get the following message:

No HMC CLI (ssh) based hosts found in the UI Host Configuration. Add your HMC CLI ssh hosts via the UI.

Comparing the time between one of the HMCs that is skipping chart data and my LPAR2RRD lab LPAR shows they are nearly in sync:

hscroot@lxsc87hmc0c01:~> date

Mon Dec 30 13:48:10 UTC 2019

[/lpar2rrd]@axscz2vpar12 $ date

Mon Dec 30 13:48:10 UTC 2019

-

OK, send us the logs. Note a short problem description in the text field of the upload form.

cd /home/lpar2rrd/lpar2rrd # or where is your LPAR2RRD working dir

tar cvhf logs.tar logs tmp/*txt tmp/*json

gzip -9 logs.tar

Send us logs.tar.gz via https://upload.lpar2rrd.com

-

Thank You Pavel for your attention.

I have submitted the requested logs.

-

The cause is a lack of CPU resources.

With the REST API we must fetch data every 20 minutes (the HMC keeps it for the last 30 minutes only), and when the load does not fit into that window, a gap occurs.
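The timing constraint described here can be sketched as a small shell check. This is only a simplified model, not LPAR2RRD code: the function name `check_cycle` and the worst-case rule (fetch interval plus run time must stay within the retention window) are assumptions made for illustration; only the 20 and 30 minute figures come from this thread.

```shell
#!/bin/sh
# Hypothetical helper, not part of LPAR2RRD.
RETENTION_MIN=30   # HMC keeps REST API data for about 30 minutes (from this thread)
INTERVAL_MIN=20    # data is fetched every 20 minutes (from this thread)

# check_cycle RUN_MINUTES
# RUN_MINUTES: how long one collection run takes on the LPAR2RRD server.
# Prints "ok" if the oldest unfetched sample is still retained when the run
# finishes, "gap" otherwise (simplified worst-case model, an assumption).
check_cycle() {
    run_min=$1
    # Worst-case age of the oldest sample: one full interval plus the run time.
    oldest=$((INTERVAL_MIN + run_min))
    if [ "$oldest" -le "$RETENTION_MIN" ]; then
        echo "ok"
    else
        echo "gap"
    fi
}
```

Under this model a run may take up to 10 minutes before samples start to fall out of the HMC window, which is why an overloaded server shows gaps even when time and time zone are correct.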

-

I have eliminated or reduced many of the chart gaps since increasing CPU and memory resources on the LPAR2RRD monitoring LPAR, but a few managed systems still display drops. I have verified that no time or time zone discrepancies exist. I will leave it running for a few days to see how it looks.

-

Hi,

OK, let us know if it still appears. If so, send us logs and a screenshot from the UI.

-

Pavel,

All monitoring charts with REST API have been corrected.

In summary, three things need to be verified for charts to display properly: the HMC time and time zone are in sync with the LPAR2RRD server, the HMC sampling rate is set to 60 for every HMC using the REST API, and sufficient CPU and memory resources are assigned to the LPAR2RRD server.
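The first two checks can be scripted roughly as follows. This is a sketch under stated assumptions: `skew_ok` and `bad_sample_rates` are hypothetical helper names, the epoch values are expected to be collected separately on each side, and the input format for the second helper assumes comma-separated `name,sample_rate` lines, such as the HMC CLI is expected to print for `lslparutil -r config -F name,sample_rate`.

```shell
#!/bin/sh
# Hypothetical helpers, not part of LPAR2RRD or the HMC CLI.

# skew_ok HMC_EPOCH LOCAL_EPOCH MAX_SKEW_SECONDS
# Succeeds when the two clocks differ by at most MAX_SKEW_SECONDS.
skew_ok() {
    diff=$(( $1 - $2 ))
    if [ "$diff" -lt 0 ]; then
        diff=$(( 0 - diff ))   # absolute value
    fi
    [ "$diff" -le "$3" ]
}

# bad_sample_rates
# Reads "name,sample_rate" lines on stdin (assumed format) and prints the
# names of managed systems whose sample rate is not 60.
bad_sample_rates() {
    awk -F, '$2 != 60 { print $1 }'
}
```

For example, `printf 'sysA,60\nsysB,0\n' | bad_sample_rates` lists only `sysB`, the system that would still need its sampling rate changed.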

Thank you kindly, Pavel, for your time and attention.

-

Hello,

I have exactly the same problem. I am also using the REST API and also have fragmented graphs on all my physical servers from one site.

I have already done all the steps in this post, and the problem still persists. The time is correct and the same between the HMC and the RRD server,

CPU and memory resources for the RRD server are higher than it needs, and lparutil is set to 60 for all physical frames.

This didn't fix my problem. Can you help me find out why this occurs and how to prevent it? Thanks.

I'm using the newest version, and my RRD environment looks like this:

LPAR2RRD version 6.15

LPAR2RRD edition free

OS info AIX

Perl version This is perl, v5.8.8 built for ppc-thread-multi

Web server info Apache/2.4.39 (Unix)

RRDTOOL version 1.7.0

RRDp version 1.6999

-

Hi Piotr,

send us:

1. a screenshot from the UI

2. logs

Note a short problem description in the text field of the upload form.

cd /home/lpar2rrd/lpar2rrd # or where is your LPAR2RRD working dir

tar cvhf logs.tar logs tmp/*txt tmp/*json

gzip -9 logs.tar

Send us logs.tar.gz via https://upload.lpar2rrd.com

-

Thanks. The screenshot and logs are uploaded.
