Load issues after upgrade to 6.10

Hello,

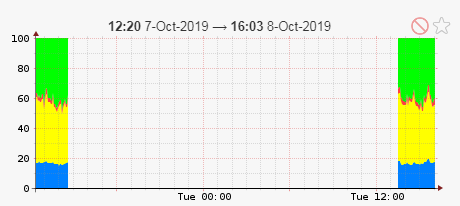

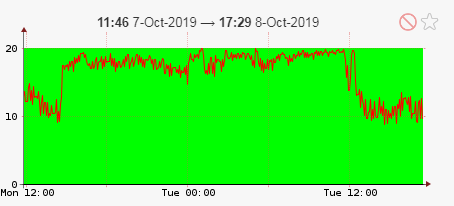

After upgrading to 6.10 on both server and client, we were facing issues with communication with the server on all lpars except one. The agent was also consuming CPU with the lscfg command.

We reinstalled the rpm on the clients, restarted the processes, and restarted the xorux server, but the issue persists.

Can you help me with this?

| Name | PID | CPU% | PgSp | Owner |
|---|---|---|---|---|
| lscfg | 22611152 | 2.5 | 23.9M | lpar2rrd |
| lscfg | 24903766 | 2.5 | 25.1M | lpar2rrd |
| lscfg | 29033406 | 2.5 | 24.1M | lpar2rrd |
| lscfg | 7735148 | 2.4 | 25.8M | lpar2rrd |
| lscfg | 47121364 | 2.3 | 24.4M | lpar2rrd |
| lscfg | 23659020 | 2.3 | 26.0M | lpar2rrd |
| lscfg | 6293730 | 2.1 | 24.1M | lpar2rrd |

lpar2rrd@(/home/lpar2rrd)# /usr/bin/perl /opt/lpar2rrd-agent/lpar2rrd-agent.pl -d xorux

LPAR2RRD agent version:6.10-0

Tue Oct 8 11:39:13 2019

main timeout 50

JOB TOP setting: MAX_JOBS=20, LOAD_LIMIT=10, LOAD_LIMIT_MIN=1, PROCESSES_INCLUDE= PROCCESS_ARGS=args

uname -W 2>/dev/null

lsattr -El `lscfg -lproc* 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err|cut -d ' ' -f3|head -1` -a frequency -F value 2>/dev/null

lsdev -Cc processor 2>/dev/null

bindprocessor -q 2>/dev/null

vmstat -v 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

svmon -G 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

lsattr -El sys0 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

lparstat -i

vmstat -sp ALL 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

lsps -s 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

amepat 2>/dev/null

lsdev -C 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

ifconfig -a 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

could not resolve Paging 1

entstat -d en0 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err|egrep 'Bytes:|^Packets:'

fork iostat 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

iostat fork timeout 20

iostat -Dsal 5 1 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

Fork wlmstat 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

wlmstat fork timeout 20

/usr/sbin/wlmstat 5 1 2>/dev/null

/usr/sbin/wlmstat -M 2>/dev/null

lsattr -a attach -El fscsi0 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

fcstat fcs0 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

lsattr -a attach -El fscsi1 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

fcstat fcs1 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

lsattr -a attach -El fscsi2 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

fcstat fcs2 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

lsattr -a attach -El fscsi3 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

fcstat fcs3 2>>/var/tmp/lpar2rrd-agent-xorux-lpar2rrd.err

Wlmstat fork ended successfully

lsdev -Ccdisk: AIX disk ID searching

: found some ids

Tue Oct 8 11:40:04 2019: agent timed out after : 50 seconds /opt/lpar2rrd-agent/lpar2rrd-agent.pl:426

Terminated

Thanks in advance,

Regards.

Comments

-

Hi,

we are trying to get the volume UUID via lscfg -vl <disk name>

How long does it run from the command line?

-

Yes, I saw that command in ps -ef, executed by lpar2rrd, seemingly at random across all disks. But we have a lot of disks, e.g. 9665 disks in 1 lpar.

The issue lasted for 24 hours, so I decided to downgrade to 6.02 to avoid CPU consumption on production machines. That version is working properly.
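To put the timing question in numbers, here is a rough sketch that multiplies the duration of one lscfg -vl call by the number of disks. The 250 ms figure is purely hypothetical (nobody in this thread measured it); the real value would have to be taken on the lpar itself, for example with something like `time lscfg -vl hdisk0`, where hdisk0 is just a placeholder device name.

```shell
#!/bin/sh
# Estimate how long a full per-disk scan takes, given the time of one call.
# Both arguments are supplied by the caller and are assumptions, not measurements:
#   $1 = milliseconds for one "lscfg -vl <disk name>" call
#   $2 = number of disk devices on the lpar
estimate_scan_seconds() {
    per_call_ms=$1
    disk_count=$2
    # Integer arithmetic; the result is rounded down to whole seconds.
    echo $(( per_call_ms * disk_count / 1000 ))
}

# Hypothetical 250 ms per call across the 9665 disks mentioned above.
estimate_scan_seconds 250 9665
```

With those assumed inputs the estimate is 2416 seconds, far beyond the 50 second main timeout shown in the debug output, so even a fairly fast lscfg could plausibly explain the timeouts at this device count.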

-

Hi,

the agent gets all disk UUIDs. It is something we will need in the near future for mapping disks between storage and server (lpar/vm).

It runs every hour. We have just changed it to once a day. Would that still be unacceptable for your environment?

10k disk devices is really quite a huge number; I have never seen that. I am afraid that even once a day it could cause problems.

A workaround would be to skip that step based on some parameter.
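The two ideas discussed here, running the UUID collection at most once a day and skipping it on request, could be sketched roughly as below. This is not the agent's actual logic: the stamp file path, the SKIP_UUID_SCAN variable and both function names are hypothetical, and `date +%s` is assumed to be supported by the local date command.

```shell
#!/bin/sh
# Sketch: decide whether a periodic scan is due, using a stamp file.
# All names here are hypothetical; nothing below is taken from the agent.
#   $1 = stamp file holding the epoch time of the last run
#   $2 = minimum interval between runs, in seconds
#   $3 = current time in epoch seconds
scan_is_due() {
    stamp_file=$1
    interval=$2
    now=$3
    # Hypothetical opt-out switch: never run when SKIP_UUID_SCAN=1.
    if [ "${SKIP_UUID_SCAN:-0}" = "1" ]; then
        return 1
    fi
    # No stamp yet means the scan has never run, so it is due.
    [ -f "$stamp_file" ] || return 0
    last_run=$(cat "$stamp_file")
    [ $(( now - last_run )) -ge "$interval" ]
}

# Record the time of the run that just finished.
mark_scan_done() {
    echo "$2" > "$1"
}

stamp="${TMPDIR:-/tmp}/uuid-scan.stamp"   # hypothetical location
now=$(date +%s)                           # assumes date supports %s
if scan_is_due "$stamp" 86400 "$now"; then
    echo "UUID scan due"
    mark_scan_done "$stamp" "$now"
else
    echo "UUID scan skipped"
fi
```

Passing the current time in as an argument keeps the check easy to exercise without waiting a day, and the opt-out variable would let an lpar with an unusually large device count disable the scan entirely.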

-

I've never had an issue with any other version. It only happens with 6.10.

So the UUID was retrieved without issues in version 6.02.

We have 5 lpars with the same configuration, but it worked on only 1 lpar. The others showed the 'agent timed out after : 50 seconds' message.

It was "hung" for a day, consuming high CPU, until I changed the version.

Yes, 10k hdisks is a crazy thing. Veritas multipath generates that number of devices. The server has 600 disks.

-

6.02 and older versions do not get UUIDs; that was introduced in 6.10.
